GPT-OSS and the best local Ollama models

This is a detailed list of all the open-source models that I've tried and tested.

@krishbhagwat

GPT-OSS

OpenAI's latest open-source model, best for users with 16GB of RAM. You can use Open WebUI, but Ollama has recently added its own UI as well, so just go with that.

How To Download:-

- Go to ollama.com.

- Download the Ollama app according to your OS.

- In the top tabs, click on Models and choose 'gpt-oss', or simply click here.

- Download the model with 20b parameters.

- Be careful not to run the larger model.

- If you're not sure which model would work best for your system, just visit ChatGPT and describe your system specs and use case.
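Once the model is downloaded, you can also talk to it from a script instead of the UI. The snippet below is a minimal sketch, assuming Ollama is running on its default local port (11434) and that the 20b model has already been pulled under the tag `gpt-oss:20b`. The helper names `build_request` and `ask` are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="gpt-oss:20b"):
    """Build a POST request for Ollama's generate endpoint (no network call yet)."""
    payload = {
        "model": model,      # assumed tag for the 20b model
        "prompt": prompt,
        "stream": False,     # ask for one JSON reply instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="gpt-oss:20b"):
    """Send the prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]


# Usage (needs the Ollama app running in the background):
#   print(ask("Explain quantization in one sentence."))
```

Building the request in a separate function keeps the network call in one place, so you can swap the model name for any of the smaller models without touching the rest.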

For 8GB RAM...

Chat & Coding (Small + Smart)

Click on the names to get redirected to their download page.

- Gemma 2B:- ~2.5GB, mostly for chat and reasoning. Has strong logic.

- Mistral 7B (Q4):- ~4.1GB, use it for chat/coding. Great speed on low-end devices.

- Phi-2:- ~1.7GB, best for lightweight chat. It's a small but smart model.

Code-Specific Models

- Code Llama 7B (Q4):- ~4.2GB

- DeepSeek Coder 1.3B:- ~2.2GB

Summarization, Docs, Productivity

- Phi-2:- ~1.7GB, it's great at summarizing small-to-medium texts

- Mistral 7B (Q4):- ~4.1GB, good at summarization + multitasking
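To see which of the models listed above are realistic for your machine, here is a rough sketch that filters them by download size. The sizes come from the lists above. The `reserved_gb` value (RAM left for the OS and other apps) is purely an assumption, so adjust it to your setup. The function name `models_that_fit` is made up for this example.

```python
# Approximate sizes in GB, taken from the lists above.
MODELS_GB = {
    "Gemma 2B": 2.5,
    "Mistral 7B (Q4)": 4.1,
    "Phi-2": 1.7,
    "Code Llama 7B (Q4)": 4.2,
    "DeepSeek Coder 1.3B": 2.2,
}


def models_that_fit(total_ram_gb, reserved_gb=3.0):
    """Return model names whose size fits in the RAM left over, smallest first.

    reserved_gb is an assumed allowance for the OS and background apps.
    """
    budget = total_ram_gb - reserved_gb
    fitting = [(size, name) for name, size in MODELS_GB.items() if size <= budget]
    return [name for size, name in sorted(fitting)]


print(models_that_fit(8))  # every model in the list fits within a 5GB budget
print(models_that_fit(6))  # only the three smallest models fit
```

This only compares file sizes. Actual memory use also grows with context length, so treat the result as a first filter, not a guarantee.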

Tips for Running Smoothly on 8GB

- Use quantized versions (Q4_0, Q4_K_M) - smaller, faster

- Close other memory-heavy apps (Chrome, VSCode, etc.)

- Run via terminal or lightweight Ollama UI to conserve RAM

- Avoid multiple concurrent sessions
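Why quantized versions help so much comes down to simple arithmetic: the weights take roughly (number of parameters × bits per weight ÷ 8) bytes. The sketch below is a back-of-the-envelope estimate only. It ignores file overhead and the memory used for context, and the function name `approx_weight_gb` is made up for this example.

```python
def approx_weight_gb(params_billion, bits_per_weight):
    """Rough size of the model weights in GB (1 GB taken as 10^9 bytes).

    params_billion: parameter count in billions (e.g. 7 for a 7B model)
    bits_per_weight: 16 for half precision, about 4 for Q4-style quantization
    """
    return params_billion * bits_per_weight / 8


print(approx_weight_gb(7, 16))  # 14.0 -> too big for an 8GB machine
print(approx_weight_gb(7, 4))   # 3.5  -> fits, with room left for the OS
```

Real Q4 files come out a bit larger than this estimate (the ~4.1GB listed for Mistral 7B, for example), because quantized formats store some extra scaling data alongside the 4-bit weights.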

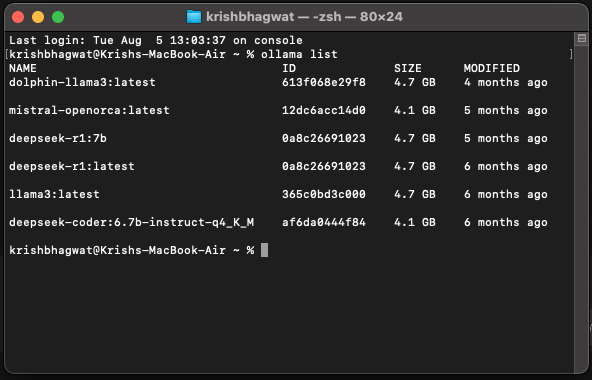

Models that I use
